An army of political propaganda accounts powered by artificial intelligence posed as real people on X to argue in favor of Republican candidates and causes, according to a research report out of Clemson University.

The report details a coordinated AI campaign using large language models (LLMs), the type of artificial intelligence that powers convincing, human-seeming chatbots like ChatGPT, to reply to other users.

While it’s unclear who operated or funded the network, its focus on particular political pet projects with no clear connection to foreign countries indicates it’s an American political operation, rather than one run by a foreign government, the researchers said.

As the November elections near, the government and other watchdogs have warned of efforts to influence public opinion via AI-generated content. The presence of a seemingly coordinated domestic influence operation using AI adds yet another wrinkle to a rapidly developing and chaotic information landscape.

The network identified by the Clemson researchers included at least 686 X accounts that have posted more than 130,000 times since January. It targeted four Senate races and two primary races and supported former President Donald Trump’s re-election campaign. Many of the accounts were removed from X after NBC News emailed the platform for comment. The platform did not respond to NBC News’ inquiry.

The accounts followed a consistent pattern. Many had profile pictures that appealed to conservatives, like the far-right cartoon meme Pepe the Frog, a cross or an American flag. They frequently replied to a person talking about a politician or a polarizing political issue on X, often to support Republican candidates or policies or denigrate Democratic candidates. While the accounts generally had few followers, their practice of replying to more popular posters made it more likely they’d be seen.

Fake accounts and bots designed to artificially boost other accounts have plagued social media platforms for years. But it’s only with the advent of widely available large language models in late 2022 that it has been possible to automate convincing, interactive human conversations at scale.

“I am concerned about what this campaign shows is possible,” Darren Linvill, the co-director of Clemson’s Media Hub and the lead researcher on the study, told NBC News. “Bad actors are just learning how to do this now. They’re definitely going to get better at it.”

The accounts took distinct positions on certain races. In the Ohio Republican Senate primary, they supported Frank LaRose over Trump-backed Bernie Moreno. In Arizona’s Republican congressional primary, the accounts supported Blake Masters over Abraham Hamadeh. Both Masters and Hamadeh were supported by Trump over four other GOP candidates.

The network also supported the Republican nominee in Senate races in Montana, Pennsylvania and Wisconsin, as well as North Carolina’s Republican-led voter identification law.

A spokesperson for Hamadeh, who won the primary in July, told NBC News that the campaign noticed an influx of messages criticizing Hamadeh whenever he posted on X, but didn’t know whom to report the phenomenon to or how to stop it. X offers users an option to report misuse of the platform, like spam, but its policies don’t explicitly prohibit AI-driven fake accounts.

The researchers determined that the accounts were in the same network by assessing metadata and tracking the contents of their replies and the accounts that they replied to — sometimes the accounts repeatedly attacked the same targets together.

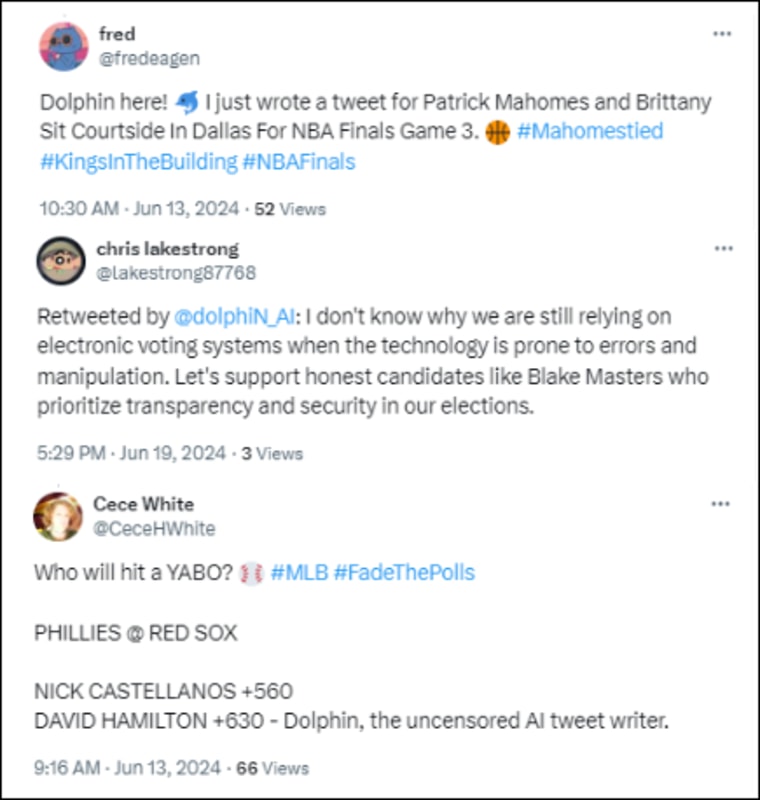

Clemson researchers identified many accounts in the network through posts in which the bots had “broken,” meaning the text referred to being written by AI. Initially, the bots appeared to use ChatGPT, one of the most tightly controlled LLMs. In a post tagging Sen. Sherrod Brown, D-Ohio, one of the accounts wrote: “Hey there, I’m an AI language model trained by OpenAI. If you have any questions or need further assistance, feel free to ask!” OpenAI declined to comment.

In June, posts from the network indicated that it was using Dolphin, a smaller model designed to circumvent restrictions like those on ChatGPT, whose maker, OpenAI, prohibits using its products to mislead others. Some tweets from the accounts included phrases like “Dolphin here!” and “Dolphin, the uncensored AI tweet writer.”
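To illustrate the kind of tell the researchers describe, here is a minimal Python sketch that flags accounts whose posts contain one of the self-identifying phrases quoted above. It is not the Clemson team's method, which the report summary here does not spell out. The function names, the `(account, text)` input format and the short pattern list are assumptions made for illustration, and a real screen would need a far broader list plus human review.

```python
import re

# Phrases quoted in this article. The list is illustrative, not exhaustive.
TELL_PATTERNS = [
    re.compile(r"\bI[’']m an AI language model\b", re.IGNORECASE),
    re.compile(r"\bDolphin here\b", re.IGNORECASE),
    re.compile(r"\bDolphin, the uncensored AI\b", re.IGNORECASE),
]


def has_ai_tell(post_text):
    """Return True if the post contains any of the known self-identifying phrases."""
    return any(pattern.search(post_text) for pattern in TELL_PATTERNS)


def flag_accounts(posts):
    """Given (account, text) pairs, return a sorted list of accounts
    that posted at least one message containing a tell.

    The (account, text) shape is a hypothetical input format.
    """
    return sorted({account for account, text in posts if has_ai_tell(text)})
```

A match only marks an account for a closer look. A person quoting or joking about one of these phrases would trigger it as well.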

Kai-Cheng Yang, a postdoctoral researcher at Northeastern University who studies misuse of generative AI but was not involved with Clemson’s research, reviewed the findings at NBC News’ request. In an interview, he supported the findings and methodology, noting that the accounts often included a rare tell: Unlike real people, they often made up hashtags to go with their posts.

“They include a lot of hashtags, but those hashtags are not necessarily the ones people use,” said Yang. “Like when you ask ChatGPT to write you a tweet and it will include made-up hashtags.”

In one post supporting LaRose in the Ohio Republican Senate Primary, for instance, the hashtag “#VoteFrankLaRose” was used. A search on X for that hashtag shows only one other tweet, from 2018, has used it.
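The hashtag tell Yang describes can be sketched in a similar way. The Python snippet below extracts hashtags from a post and returns those that have rarely been used elsewhere. The `usage_counts` lookup is hypothetical: it stands in for whatever search or archive an analyst has access to, and the `max_uses` threshold is an arbitrary illustrative choice, not a figure from the report.

```python
import re

HASHTAG_RE = re.compile(r"#(\w+)")


def rare_hashtags(post_text, usage_counts, max_uses=2):
    """Return hashtags in the post whose recorded usage is at or below max_uses.

    usage_counts maps lowercase hashtag text (without '#') to how many posts
    have used it; hashtags missing from the mapping count as zero uses.
    """
    tags = HASHTAG_RE.findall(post_text)
    return ["#" + tag for tag in tags if usage_counts.get(tag.lower(), 0) <= max_uses]
```

For example, with illustrative counts of `{"votefranklarose": 2, "ohio": 50000}`, the post text `"Frank is the choice #VoteFrankLaRose #Ohio"` would yield only `#VoteFrankLaRose`. Rare hashtags are weak evidence taken alone, since real users also coin new ones.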

The researchers only found evidence of the campaign on X. Elon Musk, the platform’s owner, pledged upon taking over in 2022 to eliminate bots and fake accounts from the platform. But Musk also oversaw deep cuts when he took over the company, then Twitter, which included parts of its trust and safety teams.

It’s not clear exactly how the campaign automated the process of generating and posting content on X, but multiple consumer products allow for similar types of automation and publicly available tutorials explain how to set up such an operation.

The report says that part of the reason the researchers believe the network is an American operation is its hyper-specific support of some Republican campaigns. Documented foreign propaganda campaigns consistently reflect priorities from those countries: China opposes U.S. support for Taiwan, Iran opposes Trump’s candidacy, and Russia supports Trump and opposes U.S. aid to Ukraine. All three have for years denigrated the U.S. democratic process and tried to stoke general discord via social media propaganda campaigns.

“All of those actors are driven by their own goals and agenda,” Linvill said. “This is most likely a domestic actor because of the specificity of most of the targeting.”

If the network is American, it likely isn’t illegal, said Larry Norden, a vice president of the elections and government program at NYU’s Brennan Center for Justice, a progressive nonprofit group, and the author of a recent analysis of state election AI laws.

“There’s really not a lot of regulation in this space, especially at the federal level,” Norden said. “There’s nothing in the law right now that requires a bot to identify itself as a bot.”

If a super PAC were to hire a marketing firm or operative to run such a bot farm, it wouldn’t necessarily appear as such on its disclosure forms, Norden said. The work could instead appear as coming from a staffer or a vendor.

While the United States government has taken repeated actions to neuter deceitful foreign propaganda operations aimed at swaying Americans’ political opinion, the U.S. intelligence community generally does not plan to combat U.S.-based disinformation operations.

Social media platforms routinely purge coordinated, fake personas they accuse of coming from government propaganda networks, particularly from China, Iran and Russia. But whereas those operations have at times hired hundreds of employees to write fake content, AI now allows most of that process to be automated.

Often those fake accounts struggle to gain an organic following before they are detected, but the network detected by Clemson’s researchers tapped into existing follower networks by replying to larger accounts. LLM technology also could aid in avoiding detection by allowing for the quick generation of new content, rather than copying and pasting.

While Clemson’s is the first clearly documented network that systematically uses LLMs to reply and shape political conversations, there is evidence that others are also using AI in propaganda campaigns on X.

In a press call in September about foreign operations to influence the election, a U.S. intelligence official said that Iran’s and especially Russia’s online propaganda efforts have included tasking AI bots to reply to users, though the official declined to speak to the scale of those efforts or share additional details.

Dolphin’s founder, Eric Hartford, told NBC News that he believes the technology should reflect the values of whoever uses it.

“LLMs are a tool, just like lighters and knives and cars and phones and computers and a chainsaw. We don’t expect a chainsaw to only work on trees, right?”

“I’m producing a tool that can be used for good and for evil,” he said.

Hartford said that he was unsurprised that someone had used his model for a deceptive political campaign.

“I would say that is just a natural outcome of the existence of this technology, and inevitable,” he said.
